Types of AI and machine learning

Artificial intelligence (AI) and machine learning are rapidly evolving fields with many different approaches and applications. AI broadly refers to any technology that enables computers to mimic human intelligence, while machine learning is a specific subset of AI focused on algorithms that learn from data and improve with experience. There are several ways to categorise AI and machine learning systems.

Categorising by capability

Narrow AI

AI models or systems designed for a specific task. They can excel at the tasks they are trained for but cannot perform beyond that specific domain.

General purpose AI (foundation models)

AI models or systems that can perform a wide range of tasks without being specifically trained for each one. Ideally, such systems would learn and adapt to new tasks in a way similar to human learning. Models based on architectures such as GPT or BERT can handle a variety of natural language processing (NLP) tasks, but may still need task-specific data and fine-tuning to perform well in a new domain.

Artificial General Intelligence (AGI)

AGI refers to a hypothetical type of artificial intelligence that would be able to understand, learn, and perform any intellectual task that a human being can. Unlike narrow or specialised AI, which is designed and trained for a specific task, an AGI system would be able to transfer knowledge from one domain to another, adapt to new tasks autonomously, and reason through complex problems in a generalised way.

Categorising by approach

Symbolic AI

Symbolic AI, also known as GOFAI ("good old-fashioned AI"), was one of the earliest approaches to building thinking machines. It involves encoding human knowledge and rules directly into a program so the computer can reason logically. The computer manipulates symbols according to predefined rules, simulating aspects of human cognition such as planning, problem-solving, knowledge representation, learning, and language use. Well-known symbolic AI systems include expert systems, knowledge-based systems, and inference engines. Though effective in some narrow domains, symbolic AI struggled to scale and lacked the flexibility to handle the uncertainty of the real world. It has largely given way to statistical, data-driven techniques, which now underpin most modern AI.

Expert Systems

Expert systems were among the first symbolic AI systems, intended to simulate and replace human expertise in specialised domains. They encode domain-specific rules provided by human experts into a knowledge base and apply logical inference over those rules to provide recommendations, diagnoses, and solutions, much like a human consultant. From the 1970s through the 1980s, expert systems were applied in niche areas such as medical diagnosis, mineral exploration, and financial services, and some achieved commercial success. Their difficulty in adapting to new knowledge and handling complex problems eventually led to their decline. However, they demonstrated the potential value of codifying and digitising human expertise.
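The inference step can be illustrated with forward chaining, one common strategy used by rule-based inference engines. The sketch below is a minimal illustration, not a reconstruction of any historical system: the car-fault rules and the helper `forward_chain` are hypothetical, and each rule is assumed to be a set of required facts plus one conclusion.

```python
# Toy forward-chaining inference engine in the style of an expert system.
# The rules and facts are hypothetical examples, not from any real system.

Rule = tuple[frozenset[str], str]  # (conditions that must all hold, conclusion)


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Repeatedly fire any rule whose conditions are all known facts,
    adding its conclusion, until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base supplied by a "human expert".
rules: list[Rule] = [
    (frozenset({"engine_wont_start", "lights_dim"}), "battery_flat"),
    (frozenset({"battery_flat"}), "recommend_jump_start"),
]

print(sorted(forward_chain({"engine_wont_start", "lights_dim"}, rules)))
```

Conclusions feed back in as new facts, so the second rule fires only because the first one did. This chaining of rules is what lets such systems reach recommendations several inference steps away from the observed facts.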

Knowledge Representation

Knowledge representation is how symbolic AI systems store what they know about the world in a computer-usable format. It involves representing facts, concepts, objects, events, relationships, and rules in a structured manner. Common knowledge representation schemes include semantic networks, frames, production rules, and formal logic. These formats let early AI systems draw inferences from stored knowledge in ways loosely modelled on human reasoning. However, the need to encode enormous amounts of knowledge by hand made symbolic AI hard to scale. Modern statistical learning techniques have eased this knowledge acquisition bottleneck by learning patterns directly from data.
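As a rough illustration of a semantic network, knowledge can be stored as (subject, relation, object) triples, with properties inherited along "is_a" links. This is a minimal sketch under assumed conventions: the triple format, the relation names, and the helpers `ancestors` and `query` are all hypothetical choices for this example.

```python
# A tiny semantic network stored as (subject, relation, object) triples.
# The facts and relation names are hypothetical examples.
triples = {
    ("canary", "is_a", "bird"),
    ("bird", "is_a", "animal"),
    ("bird", "can", "fly"),
    ("animal", "has", "skin"),
    ("canary", "has_colour", "yellow"),
}


def ancestors(entity: str) -> list[str]:
    """Return the entity followed by every category reachable via is_a links."""
    chain: list[str] = []
    frontier = [entity]
    while frontier:
        node = frontier.pop()
        if node in chain:
            continue  # avoid revisiting nodes (guards against cycles)
        chain.append(node)
        frontier.extend(o for s, r, o in triples if s == node and r == "is_a")
    return chain


def query(entity: str, relation: str) -> set[str]:
    """Collect values of `relation` on the entity or any of its ancestors,
    so categories pass their properties down to their members."""
    return {
        o
        for node in ancestors(entity)
        for s, r, o in triples
        if s == node and r == relation
    }


print(query("canary", "can"))  # inherited from "bird"
print(query("canary", "has"))  # inherited from "animal"
```

Every fact here had to be written by hand, which is the scaling problem described above.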

Machine Learning (ML)

Machine learning employs statistical techniques to give computer systems the ability to learn from data without being explicitly programmed. By detecting patterns and making data-driven predictions and decisions, machine learning algorithms can improve their performance over time. Machine learning has enabled breakthroughs in computer vision, speech recognition, natural language processing, robotics, and more. There are several major types of machine learning approaches, including supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

Categorising by type of ML model

The field of machine learning has produced a range of model types, each combining different ML techniques.

Deep Learning and Neural Networks

Deep learning is a subset of machine learning based on artificial neural networks, which are loosely inspired by the structure and function of the human brain. A neural network comprises interconnected nodes, or "neurons", organised into layers. Deep learning models stack many of these layers, allowing them to learn increasingly complex patterns from data.
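To show why stacking layers matters, here is a minimal two-layer network that computes XOR, a function that no single linear layer can represent. The weights are hand-picked for illustration, not learned. A real network would find its weights by training, usually with backpropagation and gradient descent. The helpers `dense` and `xor_net` are hypothetical names used only here.

```python
def relu(x: float) -> float:
    """Rectified linear unit: a common non-linear activation."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each neuron takes a weighted sum of all
    inputs plus a bias, then optionally applies a non-linearity."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


def xor_net(x1: float, x2: float) -> float:
    # Hidden layer (hand-set weights): h1 = relu(x1 + x2), h2 = relu(x1 + x2 - 1)
    hidden = dense([x1, x2], weights=[[1, 1], [1, 1]], biases=[0, -1], activation=relu)
    # Output layer: y = h1 - 2 * h2
    (y,) = dense(hidden, weights=[[1, -2]], biases=[0])
    return y


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```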

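Convolutional Neural Networks (CNNs): Tailored for image processing, they slide small learned filters across an image to detect local features such as edges and textures, and excel at tasks like image recognition and classification.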

Recurrent Neural Networks (RNNs): Best suited for sequential data, such as time series or natural language, because they maintain a hidden state that acts as a form of memory, carrying information from previous steps.

Generative Adversarial Networks (GANs): Comprising two neural networks (generator and discriminator) competing in a game, GANs are adept at generating new, synthetic instances of data that can pass for real data.
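The filter-sliding operation at the heart of a CNN layer can be sketched in a few lines. This assumes "valid" padding and stride 1, and like most deep learning libraries it computes cross-correlation (the kernel is not flipped). The image and the hand-set edge-detecting kernel are made-up values; in a trained CNN the kernel weights would be learned.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Slide `kernel` over `image` and take a weighted sum at each position
    (valid padding, stride 1, no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Made-up 4x4 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-set 2x2 vertical-edge detector (a CNN would learn such weights).
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # strong response only where the edge is
```

Because the same small kernel is reused at every position, a convolutional layer needs far fewer weights than a fully connected layer over the whole image. It also detects a feature wherever it appears.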

Types of Models

Transformer Models

Transformer models are a deep learning architecture, introduced in 2017, designed for handling sequences of data such as text or speech. They transform input sequences into context-aware representations using a mechanism called self-attention, which lets each element of the sequence (for example, each token in a sentence) weigh its relevance to every other element. This captures long-range dependencies and relationships within the data. Because attention over a whole sequence can be computed in parallel rather than step by step, Transformers also train efficiently on large datasets. These properties have made Transformer models dominant in natural language processing, where they generally outperform earlier architectures such as recurrent neural networks (RNNs) and long short-term memory networks (LSTMs).

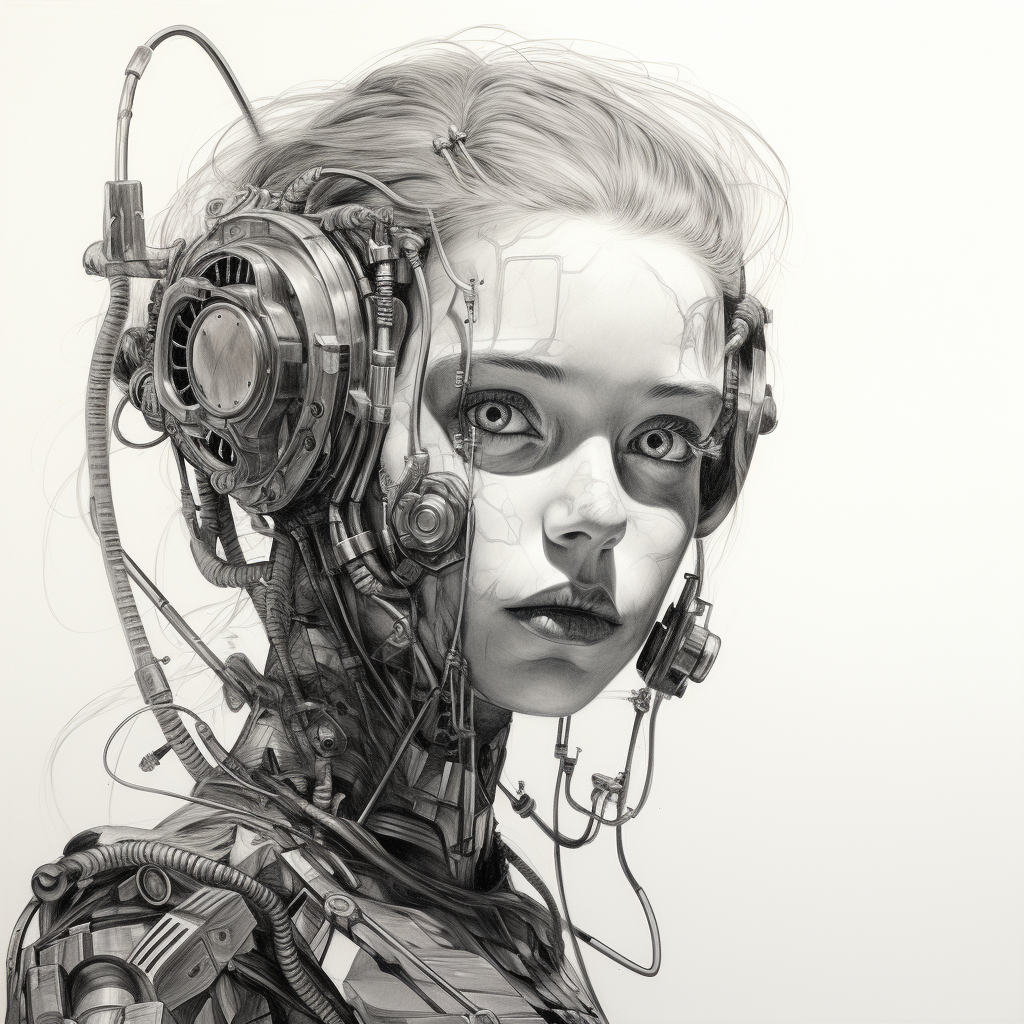
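Self-attention can be sketched as scaled dot-product attention for a single head, without masking. Each token's query is compared with every token's key, the scores are scaled by $\sqrt{d_k}$ and normalised with softmax, and the output is a weighted average of the value vectors. This is a minimal pure-Python sketch. The projection matrices `w_q`, `w_k`, `w_v` would normally be learned, and the identity matrices and token vectors below are placeholders.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (no masking).
    x: n token vectors (n x d_model). Returns (outputs, attention weights)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    weights = []
    for q_i in q:
        # How strongly token i attends to every token j, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        weights.append(softmax(scores))
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v), weights


# Placeholder projections and token embeddings, for illustration only.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(tokens, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Every row of attention weights is computed independently of the others. This is why the computation parallelises across the sequence, unlike an RNN's step-by-step recurrence.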

Generative AI

Generative AI refers to a subset of artificial intelligence focused on creating new content, such as images, music, text, or any other type of data. The essence of generative AI is its ability to generate data samples that resemble, but are not identical to, the data it was trained on. Two prominent architectures in this area are Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). A GAN, for instance, pits two networks against each other in a kind of game: a generator tries to produce data that a discriminator cannot distinguish from real data, while the discriminator learns to tell the two apart.
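The adversarial game can be written compactly. In the original GAN formulation (Goodfellow et al., 2014), a generator $G$ maps random noise $z$ to a synthetic sample, and a discriminator $D$ outputs the probability that its input is real. The two optimise the same value function in opposite directions:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is trained to maximise $V$ by scoring real data near 1 and generated data near 0, while the generator is trained to minimise it. In practice the generator is often trained to maximise $\log D(G(z))$ instead, which gives it stronger gradients early in training.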

Diffusion Models

Diffusion models are a recent breakthrough in generative modelling. Rather than producing a sample in a single pass, as some generative models do, they generate data through a stochastic process over many steps. During training, a fixed forward "diffusion" process gradually adds random noise to real data until it becomes almost pure noise, and a neural network learns to reverse this corruption one step at a time. To generate a new sample, the model starts from random noise and iteratively denoises it into a realistic data sample. This iterative, noise-driven approach enables diffusion models to produce high-quality samples, and they have proved competitive with other state-of-the-art generative methods, particularly for image generation.
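The forward (noising) half of this process has a convenient closed form. After $t$ steps, $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\varepsilon$, where $\bar\alpha_t$ is the running product of $(1-\beta_s)$ and $\varepsilon$ is standard Gaussian noise. The sketch below assumes the linear $\beta$ schedule from the DDPM paper (1e-4 to 0.02 over 1000 steps) and shows only this forward process. The learned denoising network that performs generation is omitted, and the toy sample `x0` is made up.

```python
import math
import random


def linear_beta_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Variance of the noise added at each step, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = prod_{s <= t} (1 - beta_s): how much of the original
    signal survives after t noising steps."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0: list[float], t: int, alpha_bars: list[float], rng: random.Random) -> list[float]:
    """Jump straight to step t:
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, with eps ~ N(0, 1)."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
x0 = [1.0, -0.5, 0.25]  # toy "data sample"
for t in (0, 250, 999):
    noisy = add_noise(x0, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 5), [round(v, 3) for v in noisy])
```

By the last step almost none of the original signal remains. A diffusion model is trained to undo these steps, so at generation time it can start from pure noise and work backwards.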

Large Language Models

Large language models (LLMs), such as OpenAI's GPT series, are deep learning models trained on vast amounts of text data. Their core training objective is to predict the next token (a word or word fragment) in a sequence. Because of the immense scale of their training data and architecture, they can generate coherent, contextually relevant sentences, paragraphs, or even entire essays. Given a prompt, users can apply these models to produce human-like text, answer questions, create summaries, and more. Their large size, often billions of parameters, allows them to capture intricate nuances and generalisations across languages and topics.
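The predict-then-append loop behind text generation can be illustrated with a deliberately tiny stand-in. The sketch below uses a word-level bigram model built from counts, not a Transformer over subword tokens as real LLMs use. It only shows the autoregressive idea: predict a distribution over the next word, pick one, append it, and repeat. The corpus and helper names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Estimated probability of each possible next word."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(model: dict[str, Counter], prompt: str, max_words: int = 10) -> str:
    """Greedy autoregressive generation: repeatedly append the most likely
    next word and condition the following prediction on it."""
    words = prompt.split()
    for _ in range(max_words):
        counts = model.get(words[-1])
        if not counts:
            break  # no known continuation
        words.append(counts.most_common(1)[0][0])
    return " ".join(words)


corpus = "the cat sat on the mat . the cat ate the fish ."  # toy training text
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
print(generate(model, "the", max_words=5))
```

An LLM replaces the count table with a neural network that conditions on the whole preceding context rather than just the last word. It usually samples from the predicted distribution rather than always taking the top choice.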

Machine Learning Techniques

Supervised Learning

This is the most common technique. In supervised learning, an algorithm learns from labelled training data and then predicts outcomes for new, unseen data. For example, after seeing thousands of pictures of cats and dogs labelled as such, a supervised algorithm can classify a new image as either a cat or a dog.

Popular supervised learning models include linear regression, logistic regression, decision trees, k-nearest neighbours, support vector machines, naive Bayes classifiers, neural networks, and ensemble methods. Supervised learning powers many practical AI applications, such as classifying images, predicting stock prices, detecting fraud, and recommending products. It typically requires a large set of high-quality labelled training data.
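One of the simplest supervised models listed above, k-nearest neighbours, makes the "learn from labelled examples, predict for unseen ones" idea concrete. This is a minimal sketch. The two features (weight in kg and ear length in cm) and all the data values are made up for illustration, and `knn_predict` is a hypothetical helper.

```python
import math
from collections import Counter


def knn_predict(train, query, k: int = 3):
    """Classify `query` by majority vote among the k closest labelled examples.
    train: list of (feature_vector, label) pairs."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up labelled training data: (weight_kg, ear_length_cm) -> label.
train = [
    ((4.0, 6.5), "cat"), ((3.5, 7.0), "cat"), ((5.0, 6.0), "cat"),
    ((20.0, 10.0), "dog"), ((25.0, 12.0), "dog"), ((30.0, 11.0), "dog"),
]

print(knn_predict(train, (4.2, 6.8)))    # a new, unlabelled animal
print(knn_predict(train, (22.0, 11.0)))
```

Because k-NN relies on distances, features on very different scales are usually standardised first so that one feature does not dominate.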

Unsupervised Learning

Here, algorithms learn from unlabelled data by identifying patterns or structures. Common methods include clustering, dimensionality reduction, and association rule learning. Clustering algorithms such as k-means are often used in exploratory data analysis to discover natural groupings in data. Dimensionality reduction techniques such as principal component analysis (PCA) compress data into a smaller number of features that capture most of its variation. Association rule learning identifies interesting relationships between variables, such as products that are often bought together.

Unsupervised learning is useful for uncovering hidden patterns. For instance, a grocery store might use it to segment its customers into groups based on their purchasing habits.
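The customer-segmentation example can be sketched with k-means (Lloyd's algorithm). It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. The two features (visits per month, average basket spend) and the customer values below are made up, and initialising from randomly sampled data points is one common choice among several.

```python
import math
import random


def kmeans(points, k: int, iters: int = 100, seed: int = 0):
    """Lloyd's algorithm for k-means clustering. Returns (labels, centroids)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise from k distinct data points
    labels = []
    for _ in range(iters):
        # Assignment step: index of the nearest centroid for each point.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members
        # (keep the old centroid if a cluster ends up empty).
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return labels, centroids


# Made-up customers: (visits_per_month, average_basket_spend).
customers = [(2, 80), (3, 95), (1, 70), (12, 15), (15, 20), (10, 12)]
labels, centroids = kmeans(customers, k=2)
print(labels)
print(centroids)
```

No labels are supplied: the algorithm discovers that these customers fall into an infrequent, high-spend group and a frequent, low-spend group. Interpreting what each cluster means is left to the analyst.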

Semi-supervised Learning

As the name suggests, this method sits between supervised and unsupervised learning. Semi-supervised learning combines a small labelled dataset with a larger unlabelled dataset during training. This can improve model accuracy compared with using the labelled data alone, while minimising the effort of labelling training data.

Common semi-supervised techniques include self-training, generative models, graph-based methods, and low-density separation. Semi-supervised learning is most useful when labelled data is scarce or expensive to obtain.
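Self-training, the first technique listed, can be sketched as a loop. Fit a model on the labelled data, pseudo-label the unlabelled points it is confident about, add them to the training set, and repeat. In this minimal sketch the base model is a nearest-centroid classifier, and confidence is a normalised distance margin with a threshold of 0.5. Both are illustrative assumptions, and the data points are made up.

```python
import math


def centroids_by_label(labelled):
    """Nearest-centroid 'model': the mean feature vector of each class."""
    groups = {}
    for x, y in labelled:
        groups.setdefault(y, []).append(x)
    return {y: tuple(sum(d) / len(xs) for d in zip(*xs)) for y, xs in groups.items()}


def predict(model, x):
    """Return (label, confidence). Confidence is the normalised margin between
    the closest and second-closest class centroid, in [0, 1]."""
    ranked = sorted((math.dist(x, c), y) for y, c in model.items())
    (d1, best), (d2, _) = ranked[0], ranked[1]
    return best, (0.0 if d1 + d2 == 0 else (d2 - d1) / (d2 + d1))


def self_train(labelled, unlabelled, threshold=0.5, max_rounds=10):
    """Iteratively add confidently pseudo-labelled points to the training set."""
    labelled, pool = list(labelled), list(unlabelled)
    for _ in range(max_rounds):
        model = centroids_by_label(labelled)
        scored = [(x, *predict(model, x)) for x in pool]
        confident = [(x, y) for x, y, conf in scored if conf >= threshold]
        if not confident:
            break  # nothing left that the model is sure about
        labelled += confident
        pool = [x for x, _, conf in scored if conf < threshold]
    return centroids_by_label(labelled), labelled


# Made-up data: two labelled points and four unlabelled ones.
labelled = [((1.0, 1.0), "A"), ((9.0, 9.0), "B")]
unlabelled = [(2.0, 2.0), (8.0, 8.0), (4.0, 4.0), (6.0, 6.0)]
model, expanded = self_train(labelled, unlabelled)
print(expanded)
print(predict(model, (4.0, 4.0)))
```

The threshold trades off coverage against the risk of reinforcing the model's own mistakes. A threshold set too low lets wrong pseudo-labels into the training set.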

Reinforcement Learning

In reinforcement learning, an agent learns through trial-and-error interaction with a dynamic environment. The agent explores possible actions and receives positive or negative rewards in return, learning behaviours that maximise its cumulative reward. DeepMind's AlphaGo, which combined deep neural networks, trained partly through reinforcement learning, with tree search, brought huge attention to the field when it defeated top professional Go player Lee Sedol in 2016. Reinforcement learning is used in applications ranging from robotics and autonomous driving to game-playing AI. It loosely mirrors how humans and animals adapt through experience.
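The trial-and-error loop can be sketched with tabular Q-learning, a classic reinforcement learning algorithm, on a hypothetical five-cell corridor. The agent starts at the left end and earns a reward of 1 only for reaching the rightmost cell. The environment, the hyperparameters (`alpha`, `gamma`, `epsilon`), and the episode count are illustrative assumptions, not tuned values.

```python
import random

N_STATES, GOAL = 5, 4  # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (0, 1)       # 0 = move left, 1 = move right


def step(state: int, action: int):
    """Toy environment: reward 1.0 for reaching the goal, otherwise 0.0."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action] value estimates
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore at random sometimes, otherwise exploit.
            # Ties are broken at random so all-zero early estimates still explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

The agent is never told that "right" is correct. It discovers this because the reward received at the goal is propagated back, discounted by `gamma`, to the states and actions that led there.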

Other commonly used AI categories

Computer Vision

Computer vision applies machine learning to analyse and understand digital images and videos. It enables computers to identify objects, scenes, faces, and activities in visual content. Key computer vision tasks include object classification, object detection, image segmentation, image generation, image restoration, and scene reconstruction. Convolutional neural networks, whose design was loosely inspired by the visual cortex, have driven major recent advances in the field. Applications include facial recognition, medical imaging, surveillance, augmented reality, and self-driving cars.

Natural Language Processing

Natural language processing (NLP) focuses on enabling computers to understand and generate human language. Key NLP tasks include automatic speech recognition, natural language understanding, natural language generation, machine translation, question answering, sentiment analysis, and document summarisation. Statistical techniques and neural networks have driven major progress in NLP, but challenges remain in reaching human-level conversational ability. NLP powers virtual assistants, language translation tools, text analytics, and more.

Robotics

AI and machine learning enable robots to perceive their environments, plan motion, manipulate objects, and combine these capabilities to carry out complex tasks. Locomotion, mapping, localisation, motion planning, object recognition, manipulation, learning, and autonomy are active research problems in robotics. AI helps robots adapt to new situations by learning models and control policies from experience. Robotics draws heavily on computer vision, NLP, motion control, planning, and machine learning. AI-powered robots have applications from manufacturing to medicine, but they raise complex social issues around safety, ethics, and the impact on employment.